It’s 12am, and I’m just about to drift off to sleep. I have one last refresh on my phone before putting it on charge when I see the front page of today’s D*ily M*il pop up in my Twitter feed…

This isn’t the first headline the UK gutter press has run recently in relation to Google and terrorism. Last week Google’s advertising network was all over the front pages for supposedly running adverts for large brands on extremist content, as well as funding terrorists directly (if you want the truth about that, I recommend Mat Bennett’s excellent rebuttal on the subject).

Once again, be it through lazy research or the need for a sensationalist headline, the tabloids have run a story on Google aiding the discovery of websites that assist acts of terrorism and spread extremist views without having the full facts in front of them.

As someone who works in SEO (layman’s terms: I am a marketer specialising in search engines, helping websites to appear higher in Google for keywords that deliver relevant/converting traffic) I have to say it’s a damn shame no one at the D*ily M*il thought it would be a good idea to contact someone who works in said field for comment before running this “news”. Although it also doesn’t surprise me, as letting facts get in the way of today’s headline would have made it a non-story.

So, in the name of damage control on the spreading of false information, here I am in the early hours of the morning debunking this utter rubbish with some facts.

I’ve tried to lay these out in the simplest way possible whilst using limited jargon so it makes sense to anyone and everyone.

What is Google?

To understand why any form of questionable or dangerous website can rank in Google, you must first understand what Google is and how it works.

Google is like a library of the internet. Much like back in the day when a library would keep the most extensive list of books it possibly could and make that information accessible to the public, Google keeps a large database of the websites and web pages it finds on the internet. It builds this database by crawling, and crawls trillions of pages on billions of websites every month.
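To make the library comparison a bit more concrete, here is a minimal sketch of the "card catalogue" idea: a tiny index that maps each word to the pages containing it. The page URLs and text are made up for illustration, and this is nowhere near how Google's real index works; it only shows the basic principle of looking words up in a pre-built database rather than reading the whole web at search time.

```python
from collections import defaultdict

# Hypothetical "crawled" pages: URL -> page text (made up for illustration).
PAGES = {
    "example.com/gardening": "how to grow tomatoes in a small garden",
    "example.com/recipes": "tomatoes and basil pasta recipe",
    "example.com/cars": "which cars have the most horse power",
}


def build_index(pages):
    """Map every word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query, sorted by URL."""
    words = query.lower().split()
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)


INDEX = build_index(PAGES)
print(search(INDEX, "tomatoes"))
print(search(INDEX, "tomatoes pasta"))
```

Note that the index has no idea what any page is *about*; it only knows which words appear where.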

How does Google return results to users?

Google returns what it feels are the best fitting results to suit a user’s search and orders them based on over 200 different ranking factors. No one outside Google knows the exact science behind these factors or how they interact. There are forever-evolving best practices, known dos/don’ts and theories that marketers like myself dissect and keep up-to-date with so we can take the best possible course of action in helping websites improve/retain their positioning within Google.
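As a rough illustration of what "ordering by ranking factors" means, here is a toy sketch that combines a few signals into one score and sorts by it. Everything in it is an assumption for the sake of the example: the three signal names, their weights, the site names and their scores are all invented, and the real factors and how they interact are not public.

```python
# Hypothetical signal weights: Google's real factors and weights are not public.
WEIGHTS = {"relevance": 0.6, "links": 0.3, "speed": 0.1}

# Hypothetical pages with made-up signal scores between 0 and 1.
CANDIDATES = {
    "site-a.example": {"relevance": 0.9, "links": 0.2, "speed": 0.5},
    "site-b.example": {"relevance": 0.6, "links": 0.9, "speed": 0.9},
    "site-c.example": {"relevance": 0.3, "links": 0.4, "speed": 1.0},
}


def score(signals):
    """Weighted sum of a page's signal scores."""
    return sum(WEIGHTS[name] * signals[name] for name in WEIGHTS)


def rank(candidates):
    """Return URLs ordered from highest to lowest score."""
    return sorted(candidates, key=lambda url: score(candidates[url]), reverse=True)


for url in rank(CANDIDATES):
    print(url, round(score(CANDIDATES[url]), 2))
```

The point is that the ordering falls out of the numbers automatically. No person looks at the three sites and decides which deserves to be first.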

So why can’t Google just stop terrorist content from ranking?

As stated above, in almost all cases Google’s results are not manually reviewed and are handled by an algorithm of signals. Manually reviewing and ordering results would be next to impossible seeing as it has trillions of pages to sort through and match to trillions of searches every year, 15-20% of which have never been searched for before.

The human resource required for such a task is unrealistic in any sense, and would be nowhere near as efficient considering how many searches require real-time results in the modern age.

Can’t Google just tweak the algorithm instead?

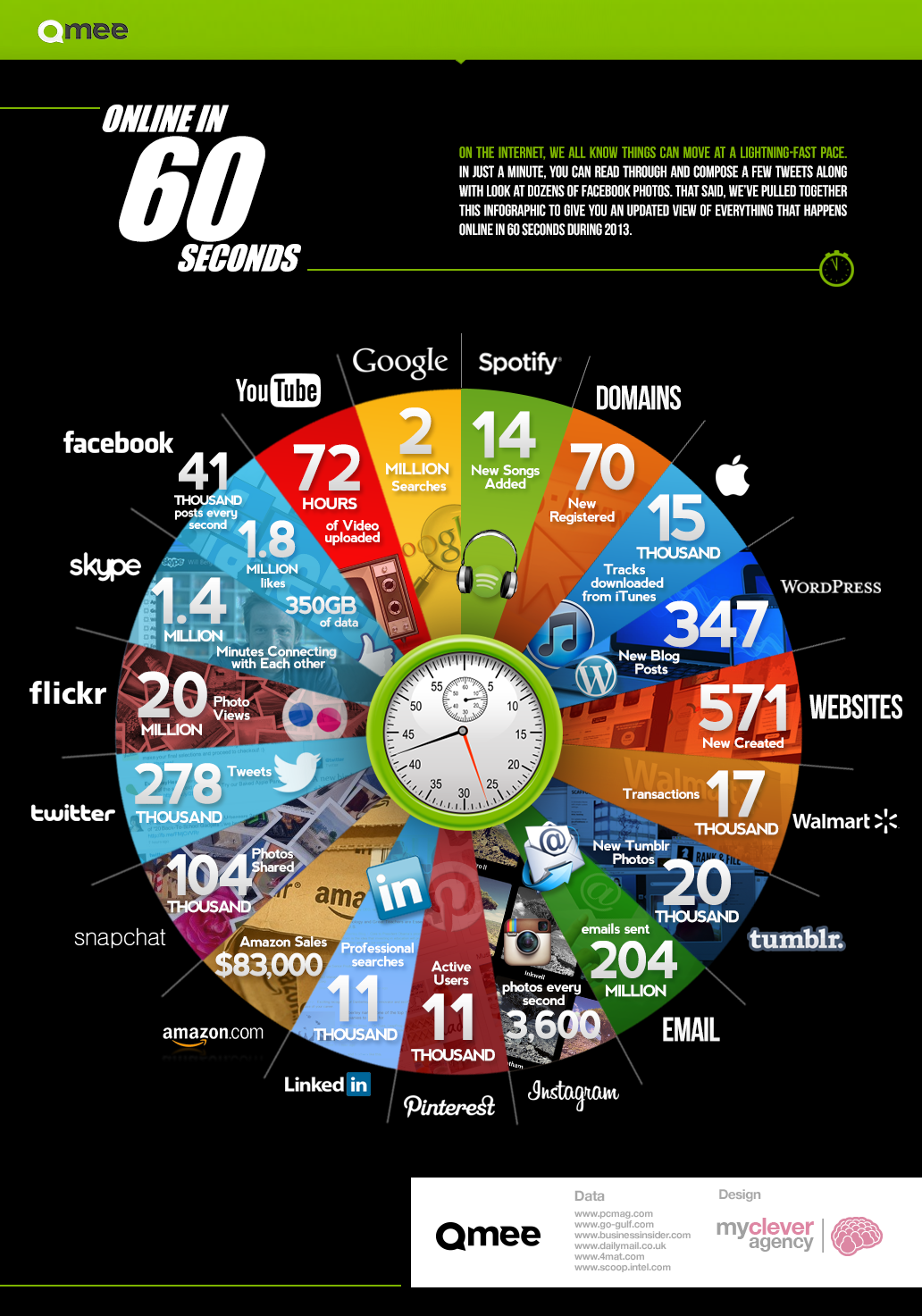

Over 500 new websites are created every minute, producing content on everything imaginable.

(Infographic via Qmee)

Google does use footprints to identify content that is and isn’t the best result for a search term, but it would be next to impossible for Google to determine what content is potentially dangerous and which is not.

Unlike the library scenario mentioned above, this makes deciding what is and isn’t displayed in search results harder. If a library decides a book should not go on their shelves, a human simply rejects the book and it isn’t stocked.

With the rate at which content grows and changes on the internet, search engines don’t have such a luxury.

Google could not do a blanket word ban, as not every piece of content or search term mentioning potential terrorist buzzwords is aiming to promote/encourage terrorism. Google is still at present unable to digest the content of videos, so it cannot block them on that basis. And Google cannot promise that banning specific websites from its results will prevent users finding that content elsewhere on a new/existing website.
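Here is a quick sketch of why a blanket word ban falls over. The banned-word list and the example headlines are made up for illustration; the filter simply blocks any text containing one of the words.

```python
import re

# Hypothetical banned-word list, purely for illustration.
BANNED_WORDS = {"bomb", "extremist", "terrorist"}


def is_blocked(text):
    """Naive blanket ban: block any text that contains a banned word."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return bool(words & BANNED_WORDS)


# Made-up examples of the kinds of content such a filter would see.
EXAMPLES = [
    "Police praise public after terrorist plot foiled",         # news report
    "History lesson: how the bomb shaped the Cold War",         # education
    "Charity helps families worried about extremist grooming",  # support service
    "Join us, brothers, the time to act is now",                 # no banned word at all
]

for text in EXAMPLES:
    print(is_blocked(text), "-", text)
```

The first three get blocked despite being perfectly innocent, while the last one sails through because it never uses a word on the list. That is both problems at once: innocent content removed, and problematic content slipping through the cracks.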

And even if Google did manage to put some kind of magic rule in place to block dangerous content, the sheer scale makes it pointless as you can guarantee that problematic searches/websites will slip through the cracks in one capacity or another.

The moral debate around search results

My colleague Chris Green wrote an interesting post around whether or not Google should have a moral obligation in policing their results recently and it remains a grey area in my opinion.

Google’s job is to return relevant results that will best serve the information a searcher is looking for, and if it didn’t it would be a pretty crappy search engine with no users.

Based on the above D*ily M*il headline, you know for a fact the search history of said journalists who produced the article is going to be littered with some rather grim searches and clicks. Does searching for terrorist-themed content mean the person doing that search is looking to commit an act of terror or is at risk of radicalisation?

Of course it doesn’t. There are countless reasons someone could be searching for such things: research, concern about a friend/family member, morbid curiosity, etc. Is a person browsing gun content doing so because they plan to commit a shooting? Or are they just simply interested in guns?

Therefore is it Google’s responsibility, even if they could spot a personalised footprint or pattern in search history, to say what a user can or can’t access?

I feel this is still up for debate, but I’m steering more towards the “no” side. Google is a tool and it is down to the user to decide how they utilise it, much like it is not the knife manufacturer’s job to police who uses one to cut up vegetables in the kitchen and who uses one to cause others harm.

Conclusion

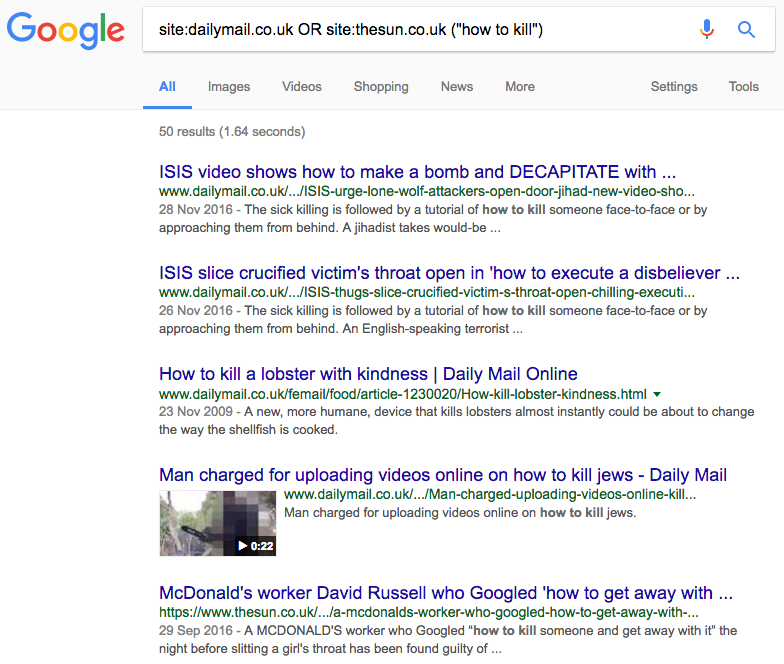

What I found most amusing is that, whilst writing this article, at least three of the searches I carried out to fact-check my figures returned a result from the D*ily M*il website, including the infographic featured above.

This proves what we already know about tabloids: they’re happy to contradict themselves over and over so long as it serves to push their agenda or sell newspapers/gain clicks.

For those who work in the SEO field, everything I’ve written here will be nothing new to you, but for anyone else I hope this post has been insightful and you’ve gained some new knowledge. Please continue to use said information when crap like the above headline is rammed down your throat.

Oh, and one last thing, just in case you wanted to see hypocrisy in its clearest form…

Daily Mail calls Google “The Terrorists’ Friend”.

Daily Mail are using:

Google DoubleClick, AdSense, Publisher Tags, Google+, Analytics. pic.twitter.com/ckD6oe32vB— dan barker (@danbarker) March 23, 2017

UPDATE 11TH APRIL 2017: In light of some discussion I’ve had off the back of this blog post, I thought I’d address some additional points…

Google has removal requests for individuals, why not apply it here?

Google has a vast number of these requests coming in as it is, and these have to come directly from the person in question who wishes certain results to be removed from the index. This already requires a lot of human resource, and is still a drop in the ocean of a sea of web content.

If Google were to create a manual flag for content that is offensive or dangerous, it would require an impossibly large team to manage, and that’s before considering the fact a lot of content is only offensive or dangerous in the eye of the beholder, meaning a rule book to determine a red flag would be huge and full of grey areas.

Google can block child porn, so how come it can’t block extremist content?

One would make the strong assumption Google can identify illegal sexual or violent images via its algorithms, using the same technology behind reverse image search.

“Google accomplishes this by analysing the submitted picture and constructing a mathematical model of it using advanced algorithms. It is then compared with billions of other images in Google’s databases before returning matching and similar results.” – Wikipedia

Identifying an illegal image is not as much a grey area as extremist content. An image is an image. If it contains a shoe then it’s a picture of a shoe. If it contains a horse it’s a picture of a horse. And if it contains an image of something illegal to look at then it’s a picture of something that’s illegal to look at.
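To give a feel for what "constructing a mathematical model" of an image and comparing it with others can look like, here is a minimal sketch using average hashing, a simple and well-known fingerprinting technique. I am swapping it in purely for illustration; I am not claiming it is what Google uses. The "images" are made-up 4x4 grids of grayscale values rather than real picture files.

```python
def average_hash(pixels):
    """Fingerprint an image: 1 where a pixel is brighter than the image's mean, else 0."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if value > mean else 0 for value in flat)


def hamming_distance(hash_a, hash_b):
    """Count the positions where two fingerprints differ (0 means identical)."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


# Made-up 4x4 grayscale "images" (0 = black, 255 = white).
ORIGINAL = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
# The same picture, slightly brightened (e.g. re-saved or edited).
BRIGHTENED = [[min(255, value + 20) for value in row] for row in ORIGINAL]
# A completely different picture.
DIFFERENT = [
    [200, 200, 200, 200],
    [200, 200, 200, 200],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]

known = average_hash(ORIGINAL)
print(hamming_distance(known, average_hash(BRIGHTENED)))  # small distance: likely the same image
print(hamming_distance(known, average_hash(DIFFERENT)))   # large distance: a different image
```

A known image can be recognised again even after small changes, because the fingerprint barely moves. There is no equivalent trick for working out the intent behind a piece of writing.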

Written content, on the other hand, is a bit harder to identify the purpose of. Google’s AI is not smart enough (at present) to understand the context of an article to a political level. There are countless reasons outside of encouraging extremism that a site could be talking about or quoting extremist content: research purposes, news coverage, quoting a source, satire, etc.

Google’s algorithms cannot decipher those differences, therefore vetting problematic content vs unproblematic content is likely not an easy task.

Plus, you can guarantee even the measures Google does have in place to stop illegal images showing up in SERPs aren’t flawless. I’m certainly not up for proving this point, but if you want to tarnish your browsing history with some questionable searches to find out that’s your decision to make.

But Google owns YouTube and blocks content on there, why can’t it do the same in search results?

For a start, the comments about image identification also come into play here. Google cannot understand video at present without written context around it, but it can take screen grabs and identify them as images.

Secondly, YouTube has a vast number of users who can flag content for removal. As far as I am aware from researching, a lot of YouTube moderation is via its user community.

And finally, things still slip through the cracks on YouTube and it’s far from a flawless model. Go on YouTube, search the filthiest sex-related keyword you can think of and filter the results by uploaded in the last hour if you don’t believe me…

Why not just blanket remove any sites or pages mentioning extremist buzz words from the index?

Because that would likely make the search results a pretty shitty place to look for things, which is the last thing Google wants for its end users. Ironically, if such a measure was put in place, it would be sites like the D*ily M*il and Th* S*n that innocently see a drop in indexed pages and organic traffic as they use such words in content all the time.

And this is without even beginning an argument on censorship, where the line is drawn and who makes the decisions on what is and isn’t unacceptable content. Personally, I’d rather not live in a nanny state for the sake of preventing people finding information in a search engine.

But Google seems to have no problem blocking Conservative news sources. Double standards surely?

No, Google is blocking “fake news”. Many “conservative” (I use inverted commas as I’d say most of the sites in question veer more to the far right) sites have a known track record of presenting half-baked or completely incorrect stories as fact. These are the sites being removed from search results. And why shouldn’t they be?

There are plenty of Conservative websites out there that are still accessible via Google and have a better track record of factual reporting.

Why are you so defensive of Google? Do you love them?

Google is the main reason I have a job, but I am also well aware Google is far from perfect. Here are a few of my own criticisms to ponder over…

- Could Google do more to police its ad network? Probably.

- Should Google be paying its fair share of business tax in the countries it profits in? Certainly.

- Is the amount of data Google has on all of us as individuals and as a collective worrying? Definitely.

- Is the continual shift in PPC workings and limiting the data Google gives us a way of making it harder for businesses to make accurate marketing decisions and put more money in its own pocket? I believe so.

- Are rich snippet and knowledge graph results essentially a way of Google harvesting content for its own use and stealing valuable clicks from deserving websites? Yep.

I think I make it pretty clear in the above points I have plenty of issues with Google, but if you’re going to bash someone with a stick at least bash them for the right reasons instead of making up poorly researched ones like the original news story in question.

*I refuse to mention the D*ily M*il or link to their website on moral grounds.*

Some good points. But you forget to address the fact that Google already removes results from its searches – in relation to specific individuals on request for example. So they clearly are able to control what searches return – at least for rich individuals who don’t like their past coming up in searches.

Hi Nobby, thanks for your comment.

Google does indeed have removal requests, however those relate to individuals and have to come via the individual in question. These already require considerable human resource to manage, and when you consider how big Google’s index is, personal removal requests are a drop in the ocean of web content. There is no way Google could realistically scale personal removal requests and apply them to dangerous content. It would also be in the eye of the beholder as to what is and isn’t dangerous content; there are plenty of examples of articles on the DM site I’d consider dangerous or promoting hate, but their readership likely would not. Creating a guideline of what is and isn’t a red flag to remove content from the index would be full of grey areas.

https://www.google.com/transparencyreport/removals/europeprivacy/

This was something I considered covering in the blog post but it was 2am by this point and it is a school night, so glad you’ve brought it up 🙂

Cheers.

this is true nobby. They are working with intelligence agencies to remove breitbart and infowars from search results. They are more concerned with removing conservative opinion than removing terrorism from search engines.

I get this article above,

but it’s still true that people can still find that information easily. Can you find child porn easily? No, because they have removed it from search engines.

Can you find terrorist manuals and best practices? Yes, because Google hasn’t removed it.

Why we get on our high horse about the Daily Mail again and again is tiring. They are doing the job that other newspapers don’t dare touch. The Truth.

And the truth doesn’t care for your opinion.

Hi Rob,

As mentioned above (and on our FB discussion) child porn is much easier for Google to spot than the context of an article. Things that are illegal are usually hidden on the dark web too as no one uploading that kind of stuff wants to be traceable, it is illegal after all.

As for removing conservative content, Google can remove specific sites from search, and I safely imagine there are plenty of known terrorist sites Google has also demoted/removed in the past. I’m sure a few Google searches can prove this.

Political opinion aside, the Daily Mail has posted a poorly researched article full of holes, and this is what this article covers. Whether you agree with their political agenda is not the discussion this blog aims to raise, but how search engines work and why terrorist content can appear in search results.

I think the terrorists also watch Top Gear to learn which cars have the most horse power. Blame the BBC too. And, no doubt you can also get this dangerous information from those dangerous LIBRARIES too! Close all the libraries! Oh, hang on, Tories already doing that. Maybe if libraries were funded properly, people would not need Google? #wishfulthinking

To quote a certain Rachel from Friends, ‘whom! Whom! Sometimes it’s Who!’

Jokes apart. Thanks. Very well written and easy to understand for the average Joe.

Banning or censoring Google is like banning a library. We don’t live in a nanny state and having moved here from one, believe me, it’s the common man that suffers. The sinister lot will always find a way around it.

Also, makes one wonder. Why was the Da* M* even googling all that. Are they in on it? Is that why they have an openly anti front, just so they can deep down incite hatred and indirectly promote terr*ism?